Open Source Intelligence (OSINT) has become a cornerstone of modern intelligence work — from cyber threat analysis to corporate due diligence and investigative journalism. With a wealth of publicly available information just a few clicks away, the real challenge no longer lies in accessing data, but in determining its value.

Not all sources are equal, and not all information should be trusted at face value. In an age of misinformation, spoofed identities, and manipulated content, the ability to critically evaluate OSINT is essential. Whether you’re conducting research for a security operation or building a threat profile, understanding how to assess the credibility, accuracy, and relevance of your findings is what turns raw data into actionable intelligence.

In this post, we’ll explore why evaluation is such a crucial stage in the OSINT process, introduce key criteria and techniques for assessing intelligence, and provide practical advice to help you strengthen your evaluation skills.

Why Evaluation Matters in OSINT

The open nature of OSINT is both its greatest strength and its biggest vulnerability. While the accessibility of public data allows for rich and diverse intelligence gathering, it also means the information collected can be incomplete, misleading, outdated, or deliberately false. Without rigorous evaluation, even the most promising-looking data can lead analysts down the wrong path.

In security contexts, acting on flawed intelligence can have serious consequences — from reputational damage and wasted resources to operational failure or legal risk. A single unverified claim from an untrustworthy source can compromise an entire investigation or response effort.

It’s also important to distinguish between data, information, and intelligence. OSINT collection yields data — raw, unprocessed facts. When those facts are organised and given context, they become information. But it’s only through evaluation — the process of assessing accuracy, reliability, and relevance — that information is transformed into intelligence that decision-makers can act on with confidence.

In short, evaluation is what separates noise from insight. It’s not just a good practice — it’s a critical step that determines the overall value and credibility of your intelligence output.

Core Evaluation Criteria

Evaluating OSINT effectively requires a structured approach. Rather than relying on gut instinct or assumptions, analysts should assess each piece of information against a set of established criteria. This ensures consistency, reduces bias, and increases the likelihood that your final intelligence product will be trusted and actionable.

Here are five key criteria that can guide your evaluation process:

1. Relevance

Does the information directly relate to your intelligence requirement or objective? OSINT can be full of interesting but tangential details. Focusing only on what is relevant ensures your analysis remains targeted and efficient.

2. Reliability

Is the source trustworthy? Consider the origin of the data — is it a reputable website, a verified account, or a known organisation? Or is it an anonymous post on a forum with no verifiable backing? The credibility of the source often dictates the reliability of the information it provides.

3. Accuracy

Is the information factually correct? Has it been corroborated by other sources? Are there inconsistencies, errors, or signs of manipulation? Verifying accuracy is especially important when dealing with fast-moving events or user-generated content.

4. Timeliness

Is the data current? Outdated information can skew your analysis, particularly in areas like cybersecurity or geopolitical monitoring where things change rapidly. Always check publication dates and consider whether the information still reflects the present reality.

5. Objectivity

Is the content neutral, or does it show bias? Be wary of emotionally charged language, persuasive tone, or content designed to provoke. Identifying whether the source has an agenda can help you judge how much weight to give the information.

Using the Admiralty Code

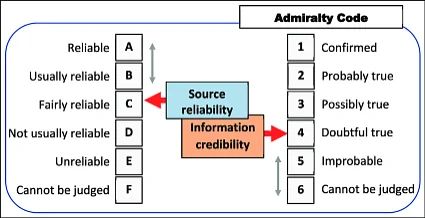

One widely recognised method for evaluating sources and information is the Admiralty Code, also known as the NATO Source Reliability and Information Credibility grading system. It uses a two-part alphanumeric rating to assess:

- Source Reliability (A–F) – how dependable the source is based on past performance, access to information, and known biases.

- Information Credibility (1–6) – how believable the information is, based on corroboration, plausibility, and consistency with known facts.

For example, a rating of A1 indicates a completely reliable source providing information confirmed by other sources, while E5 flags an unreliable source offering improbable information. Although originally designed for military intelligence, the Admiralty Code adapts well to OSINT workflows as a quick way of scoring confidence in your findings.

By combining the Admiralty Code with the core evaluation criteria above, analysts can create a more transparent, defensible assessment process that supports better decision-making.
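To make the two-part rating concrete, here is a minimal Python sketch of how a rating could be stored and validated. The label wording follows the commonly published version of the Admiralty Code and varies slightly between publications; the `AdmiraltyRating` class and `parse_rating` helper are hypothetical names made up for illustration.

```python
from dataclasses import dataclass

# Commonly published Admiralty Code labels (wording varies by publication).
SOURCE_RELIABILITY = {
    "A": "Completely reliable",
    "B": "Usually reliable",
    "C": "Fairly reliable",
    "D": "Not usually reliable",
    "E": "Unreliable",
    "F": "Reliability cannot be judged",
}

INFORMATION_CREDIBILITY = {
    1: "Confirmed by other sources",
    2: "Probably true",
    3: "Possibly true",
    4: "Doubtful",
    5: "Improbable",
    6: "Truth cannot be judged",
}


@dataclass(frozen=True)
class AdmiraltyRating:
    """Hypothetical container for one source/information rating pair."""
    reliability: str  # letter A-F
    credibility: int  # number 1-6

    def __post_init__(self):
        # Reject anything outside the two published scales.
        if self.reliability not in SOURCE_RELIABILITY:
            raise ValueError(f"unknown reliability grade: {self.reliability!r}")
        if self.credibility not in INFORMATION_CREDIBILITY:
            raise ValueError(f"unknown credibility grade: {self.credibility!r}")

    @property
    def code(self) -> str:
        return f"{self.reliability}{self.credibility}"

    def describe(self) -> str:
        source = SOURCE_RELIABILITY[self.reliability].lower()
        info = INFORMATION_CREDIBILITY[self.credibility].lower()
        return f"{self.code}: source {source}; information {info}"


def parse_rating(text: str) -> AdmiraltyRating:
    """Parse a two-character rating such as 'B2' (case-insensitive)."""
    text = text.strip().upper()
    if len(text) != 2 or not text[1].isdigit():
        raise ValueError(f"expected a letter followed by a digit, got {text!r}")
    return AdmiraltyRating(text[0], int(text[1]))
```

Calling `parse_rating("b2").describe()` would give a short, readable summary you could paste into a report. Keeping the two parts separate matters: a reliable source can still pass on doubtful information, and the rating should be able to say so.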

Source Evaluation Techniques

Once you’ve identified what you’re looking for and established your evaluation criteria, the next step is to put those principles into practice. Evaluating sources effectively requires both critical thinking and a methodical approach. Below are some techniques that can help analysts assess the credibility, authenticity, and relevance of open source material.

1. Corroboration Across Multiple Sources

One of the most effective ways to validate information is through corroboration. Can the same information be found across multiple independent, reputable sources? If different, unrelated sources are reporting the same facts, confidence in the information naturally increases. Be mindful, however, of information echo chambers — where multiple outlets are simply republishing or citing the same original (and possibly flawed) source.
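The echo chamber problem is easier to spot if you record who each outlet is citing. The sketch below is one hedged way to do that in Python: it assumes you have noted, for a single claim, which outlet each report is repeating (if any), and it groups reports by the origin they trace back to. The `Report` class and `independent_origins` function are hypothetical, and the outlet names are invented.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Report:
    """One outlet's report of the claim being checked (hypothetical structure)."""
    outlet: str
    cites: Optional[str] = None  # outlet this report is repeating, if known


def independent_origins(reports: List[Report]) -> Dict[str, List[str]]:
    """Group outlets by the origin their report ultimately traces back to.

    Assumes one report per outlet. The number of keys in the result is the
    number of apparently independent origins, which is what corroboration
    should be counted on.
    """
    cites = {r.outlet: r.cites for r in reports}
    groups = defaultdict(list)
    for report in reports:
        origin, seen = report.outlet, set()
        # Follow the citation chain until it ends (or loops back on itself).
        while cites.get(origin) and origin not in seen:
            seen.add(origin)
            origin = cites[origin]
        groups[origin].append(report.outlet)
    return dict(groups)


reports = [
    Report("Wire A"),
    Report("Blog B", cites="Wire A"),
    Report("Site C", cites="Blog B"),
    Report("Paper D"),
]
origins = independent_origins(reports)
print(len(origins), "independent origins for", len(reports), "reports")
```

In this invented example four reports collapse to two origins, so the claim is corroborated twice, not four times.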

2. Trace the Original Source

Always seek the original source of information rather than relying on summaries, screenshots, or secondary reporting. When analysing a news story, forum post, or leaked document, trace it back to its origin to assess context, authenticity, and potential manipulation. Metadata, timestamps, and file properties can offer valuable clues in verifying source integrity.

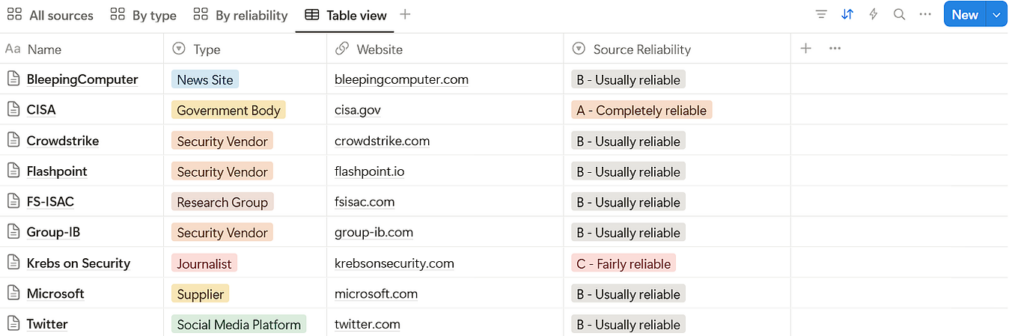

3. Use of Source Grading Systems

Incorporating a formal source grading system, such as the Admiralty Code, adds structure to your evaluation. Assigning a reliability and credibility rating to each source not only helps prioritise information but also makes your intelligence product more transparent and defensible.

4. Evaluate Digital Footprints

For online content, take time to assess the digital presence of the source. Does a social media profile show a consistent identity over time, or does it exhibit signs of automation or inauthentic behaviour? Techniques such as reverse image searches, domain registration checks (WHOIS), and historical snapshots (via the Wayback Machine) can help verify source history and legitimacy.
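As one example of checking source history programmatically, the Internet Archive offers a simple availability endpoint for the Wayback Machine. The Python sketch below builds the request URL and reads the closest snapshot from the JSON response. The endpoint and response fields reflect the public API as I understand it, so check the Internet Archive's documentation before relying on them; the function names are my own.

```python
import json
from datetime import datetime
from typing import Optional, Tuple
from urllib.parse import urlencode
from urllib.request import urlopen

WAYBACK_API = "https://archive.org/wayback/available"


def availability_url(page_url: str, timestamp: Optional[str] = None) -> str:
    """Build the query URL; timestamp is an optional YYYYMMDD[hhmmss] string."""
    params = {"url": page_url}
    if timestamp:
        params["timestamp"] = timestamp
    return f"{WAYBACK_API}?{urlencode(params)}"


def closest_snapshot(response: dict) -> Optional[Tuple[str, datetime]]:
    """Return (snapshot URL, capture time) from a parsed response, or None."""
    closest = response.get("archived_snapshots", {}).get("closest")
    if not closest or not closest.get("available"):
        return None
    captured = datetime.strptime(closest["timestamp"], "%Y%m%d%H%M%S")
    return closest["url"], captured


def lookup(page_url: str, timestamp: Optional[str] = None):
    """Perform the network request (requires internet access)."""
    with urlopen(availability_url(page_url, timestamp), timeout=10) as resp:
        return closest_snapshot(json.load(resp))
```

A site that claims a long publishing history but has no captures before last month deserves a closer look, although a missing snapshot on its own proves nothing: plenty of legitimate pages are never archived.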

5. Consider the Source’s Motivation and Bias

Understanding why a source is publishing certain information can help contextualise its reliability. Is the content investigative, promotional, political, or satirical? Is it user-generated or professionally produced? Analysing tone, language, and publication history can reveal bias or intent that may affect credibility.

6. Balance Automation with Human Judgement

While automated tools like browser plugins, scraping utilities, and AI classifiers can assist in sorting and filtering OSINT, human evaluation remains essential. Algorithms can flag suspicious patterns, but they may miss nuance, satire, or contextual subtleties. The most effective OSINT analysts use tools to support — not replace — critical thinking.

By applying these techniques consistently, analysts can reduce the risk of misinformation, increase the quality of their assessments, and build intelligence that decision-makers can trust. Evaluation isn’t just a stage in the process — it’s an ongoing discipline throughout the lifecycle of any OSINT investigation.

Practical Tips for Evaluators

Even with a solid framework and a set of reliable techniques, OSINT evaluation often comes down to the fine details — the subtle clues, the consistency checks, and the instinct honed by experience. This section offers practical, hands-on advice to help you refine your evaluation skills and avoid common pitfalls.

1. Keep an Evaluation Log

Maintain a record of how you’ve assessed each source — including decisions around credibility, context, and any verification steps taken. This is especially important in collaborative environments or when intelligence may need to be defended later. Tools like analyst notebooks, spreadsheets, or structured databases can help you track this clearly.
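If a spreadsheet is your log of choice, a few lines of Python can keep the columns consistent. This is only a sketch: the field names in `LogEntry` are suggestions of mine, not a standard, and you should adapt them to whatever your team needs to defend an assessment later.

```python
import csv
import io
from dataclasses import asdict, dataclass, fields
from datetime import datetime, timezone


@dataclass
class LogEntry:
    """One evaluation decision (field names are illustrative)."""
    source: str
    claim: str
    rating: str              # e.g. an Admiralty Code rating such as "C3"
    verification_steps: str
    analyst: str
    assessed_at: str = ""    # filled in automatically when left empty

    def __post_init__(self):
        if not self.assessed_at:
            now = datetime.now(timezone.utc)
            self.assessed_at = now.isoformat(timespec="seconds")


def write_log(entries, stream) -> None:
    """Write entries as CSV, with a header row, to any text stream."""
    writer = csv.DictWriter(stream, fieldnames=[f.name for f in fields(LogEntry)])
    writer.writeheader()
    for entry in entries:
        writer.writerow(asdict(entry))


buffer = io.StringIO()
write_log(
    [LogEntry("anonymous forum post", "site X was breached", "E5",
              "no corroboration found; reverse image search on screenshot",
              "analyst-1")],
    buffer,
)
print(buffer.getvalue())
```

To write to disk instead, pass a file opened with `open("evaluation_log.csv", "w", newline="")` in place of the in-memory buffer.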

2. Use Source Checklists

Create a simple checklist to run through each time you assess a source. This could include prompts like:

- Does the source have a known history or digital presence?

- Is the information supported by others?

- Can I identify any potential bias?

- What’s the Admiralty Code rating?

Having a repeatable checklist reduces oversight and builds consistency in your process.
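If you keep your notes digitally, the same checklist can be expressed as data so that skipped prompts are flagged automatically. The Python sketch below reuses the four prompts above; the keys and the `unanswered` helper are hypothetical names chosen for illustration.

```python
from typing import Dict, List

# The four prompts from the checklist above, each with a short key.
CHECKLIST = [
    ("known_presence", "Does the source have a known history or digital presence?"),
    ("corroborated", "Is the information supported by others?"),
    ("bias_checked", "Can I identify any potential bias?"),
    ("admiralty_rating", "What's the Admiralty Code rating?"),
]


def unanswered(answers: Dict[str, object]) -> List[str]:
    """Return the prompts that have not been answered yet.

    An answer of False still counts as answered: "no, it is not
    corroborated" is a finding, whereas a missing key is an oversight.
    """
    return [prompt for key, prompt in CHECKLIST if answers.get(key) in (None, "")]


answers = {"known_presence": True, "corroborated": False, "admiralty_rating": "C3"}
for prompt in unanswered(answers):
    print("Still to do:", prompt)
```

The distinction between "answered no" and "not answered" is the useful part: the checklist is there to catch steps you forgot, not to force every answer to be positive.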

3. Beware of Confirmation Bias

It’s easy to give more weight to information that aligns with your assumptions or desired outcomes. Make a conscious effort to challenge your own conclusions by seeking contradictory or alternative views. A good analyst considers what’s missing, not just what’s present.

4. Apply Lateral Reading

When evaluating websites or media content, use lateral reading — that is, open other tabs to research the author, domain, or claims from outside sources rather than staying within the original source’s ecosystem. This is especially useful when verifying unfamiliar outlets or detecting disinformation.

5. Factor in Context and Culture

Context matters. A piece of content that appears misleading may be satire, a mistranslation, or culturally specific. Understanding the context in which content was created — including language, location, and intended audience — can significantly impact how it should be interpreted and evaluated.

6. Treat OSINT Like Evidence

Approach OSINT evaluation with the same care and scrutiny as if you were handling physical evidence. Every claim should be backed by verification or flagged as unconfirmed. If there are gaps or assumptions, make them explicit. This rigour supports better intelligence products and protects your credibility as an analyst.

Tools to Support OSINT Evaluation

While critical thinking is at the heart of any good OSINT evaluation, the right tools can streamline your workflow, support verification, and uncover valuable context. These tools don’t replace human judgement, but they do enhance your ability to assess the reliability, credibility, and relevance of open source material.

Below is a selection of tools, grouped by function, that can support your evaluation efforts:

Source Verification and Reputation

- WHOIS Lookup (e.g. Whois.domaintools.com, ViewDNS.info): Check domain registration details to assess how long a site has been active and who owns it.

- Wayback Machine (archive.org): View historical versions of web pages to track changes or confirm the existence of content at a given time.

- DomainTools Iris or RiskIQ PassiveTotal: More advanced tools for investigating infrastructure, subdomains, and digital footprints of websites.

Media and Content Verification

- Google Reverse Image Search / TinEye / Yandex: Check whether images are original or reused across different contexts, possibly indicating misinformation.

- InVID / WeVerify Toolkit: Useful for verifying videos and images from social media, checking for manipulation or date/location mismatches.

- Metadata Extractors (e.g. ExifTool): Analyse image and file metadata to identify origin, device, and timestamps, where available.

Social Media Evaluation

- Account Analysis Tools (e.g. WhoisThisProfile, Social Searcher): Evaluate the activity and legitimacy of social media accounts by checking post history, bio details, and follower patterns.

- Hoaxy: Visualises how information spreads across Twitter, which is useful for identifying echo chambers, bots, or coordinated disinformation.

Information Cross-Referencing

- Google Advanced Search / Operators: Use search modifiers (like site:, intitle:, or filetype:) to home in on credible or official sources.

- OSINT Framework (osintframework.com): Not a tool itself, but a curated directory of tools and resources for various OSINT tasks, including evaluation and verification.
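The search operators mentioned above are easy to combine by hand, but if you run the same style of query often, a small helper keeps them consistent. This Python sketch only builds the query string and a search URL; `build_query` and `search_url` are hypothetical helpers, and `example.org` is a placeholder domain.

```python
from typing import Optional
from urllib.parse import urlencode


def build_query(terms: str,
                site: Optional[str] = None,
                intitle: Optional[str] = None,
                filetype: Optional[str] = None) -> str:
    """Combine free-text terms with optional site:, intitle: and filetype: operators."""
    parts = [terms]
    if site:
        parts.append(f"site:{site}")
    if intitle:
        # Quote the title phrase so multi-word titles are matched as a unit.
        parts.append(f'intitle:"{intitle}"')
    if filetype:
        parts.append(f"filetype:{filetype}")
    return " ".join(parts)


def search_url(query: str) -> str:
    """Return a Google search URL for the query, ready to open in a browser."""
    return "https://www.google.com/search?" + urlencode({"q": query})


query = build_query("ransomware advisory", site="example.org", filetype="pdf")
print(query)
print(search_url(query))
```

Recording the exact query string in your evaluation log also makes the search repeatable by a colleague reviewing your work.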

Structured Evaluation and Analysis

- Maltego: Helps visualise and map relationships between entities (people, domains, IPs, etc.), useful for contextualising source networks.

- Hunchly: A browser plugin that automatically captures and logs every page you visit, supporting transparency and traceability in your investigations.

- IntelTechniques Workbook / Casefile: Structured templates and tools from the OSINT community that support methodical evaluation and reporting.

Case Study: Misidentification in the Boston Marathon Bombing

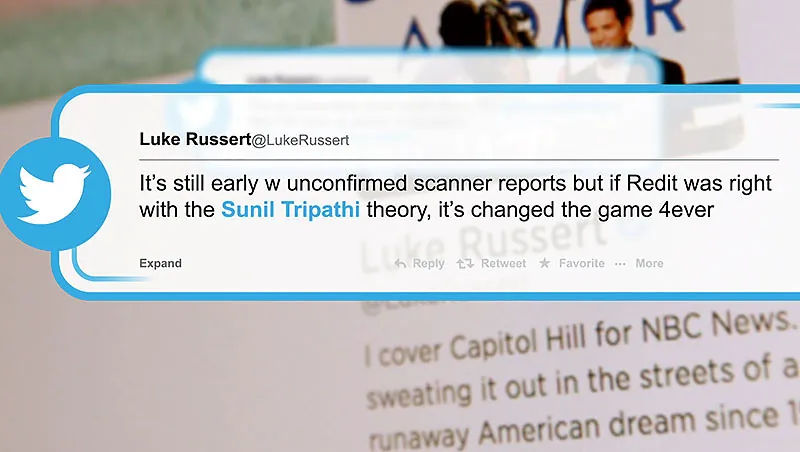

The 2013 Boston Marathon bombing provides a powerful example of how poor OSINT evaluation can lead to serious consequences. In the immediate aftermath of the attack, online communities — particularly Reddit — attempted to crowdsource intelligence to help identify the perpetrators.

The OSINT Effort

Amateur investigators analysed photos, videos, and social media posts to spot “suspicious” individuals in the crowd. One person in particular, Sunil Tripathi, a missing university student, was misidentified as a suspect based on vague visual similarities and unverified assumptions.

Reddit threads, Twitter posts, and even some journalists picked up on the speculation, causing his name and photo to circulate rapidly online. This led to distress for his family, public confusion, and the further spread of misinformation.

What Went Wrong?

- No Source Validation: The photos used were low-resolution and out of context. Little effort was made to verify the original source or timestamp.

- Lack of Corroboration: Claims were amplified without independent verification or official confirmation.

- Confirmation Bias: Users were looking for someone who looked like they could be a suspect, rather than critically evaluating the data.

- Absence of a Structured Framework: There was no use of a system like the Admiralty Code to assess source reliability or information credibility.

The Impact

Authorities later confirmed that Tripathi had no involvement in the bombing — he had sadly died by suicide prior to the attack. The incident highlighted how untrained use of OSINT and failure to properly evaluate information can lead to serious reputational harm, emotional trauma, and the derailment of actual investigations.

This case shows that while open source intelligence can be powerful, it must be used responsibly. Without evaluation, it’s just noise — and in high-stakes situations, that noise can do real damage.

Conclusion: Evaluation Is the Heart of Effective OSINT

Open source intelligence has become a cornerstone of modern investigations, from cybersecurity and law enforcement to journalism and corporate risk. But the sheer volume of available information means that gathering data is no longer the hard part — evaluating it is.

As we’ve seen, the effectiveness of OSINT hinges not on what you collect, but on how you assess it. Poorly evaluated intelligence can mislead, cause harm, or result in missed opportunities. In contrast, well-evaluated OSINT builds clarity, confidence, and strategic value.

Whether you’re using the Admiralty Code, applying structured frameworks, or leveraging specialised tools, the goal remains the same: to produce intelligence that is accurate, reliable, and actionable. Evaluation isn’t a final step in the OSINT process — it’s woven throughout.

In an age where misinformation spreads faster than truth, the ability to critically evaluate open source material isn’t just a skill — it’s a responsibility.

Header photo by Mike Kononov on Unsplash; balance photo by Jeremy Thomas on Unsplash; tools photo by Immo Wegmann on Unsplash.